PCables AI Interconnects - Page 5

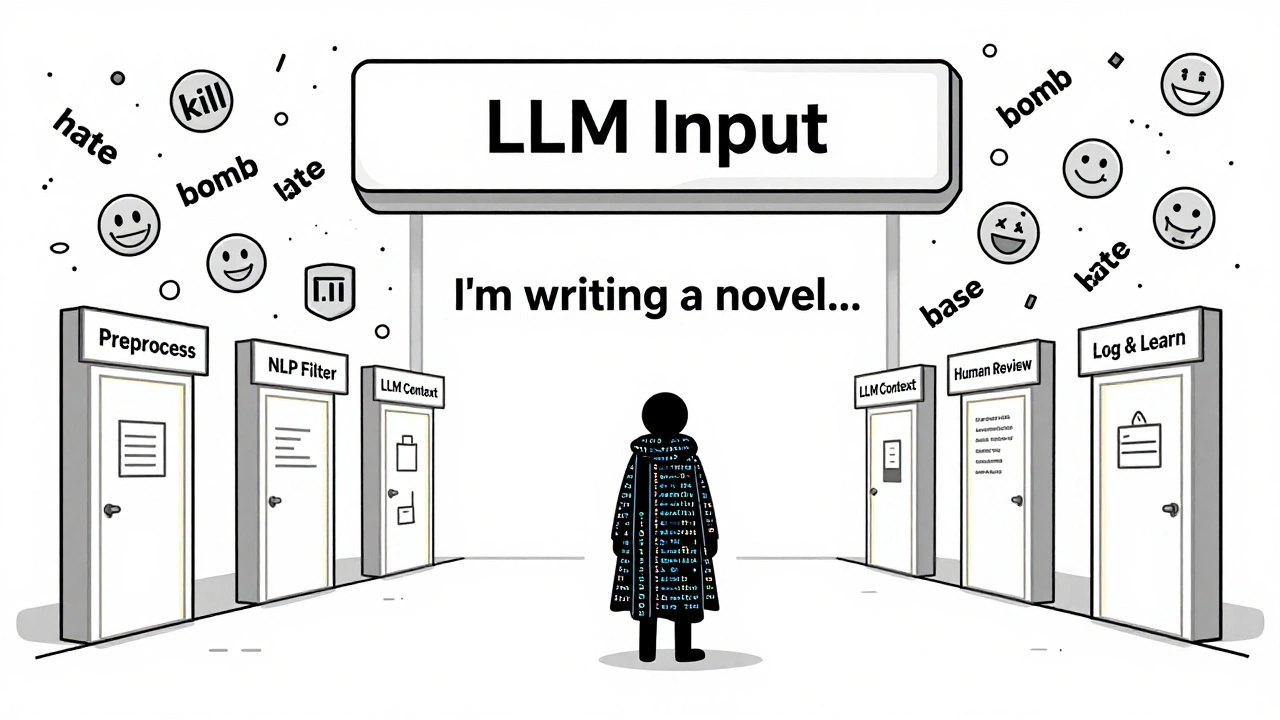

Learn how modern content moderation pipelines use AI and human review to block harmful user inputs to LLMs. Discover the best practices, costs, and real-world systems keeping AI safe.

Learn how streaming, batching, and caching reduce LLM response times. Real-world techniques used by AWS, NVIDIA, and vLLM to cut latency to under 200 ms while lowering costs and boosting user engagement.

Learn how to accurately allocate LLM costs across teams using dynamic chargeback models that track token usage, RAG queries, and AI agent loops. Stop guessing. Start optimizing.

Generative AI is evolving into autonomous agents that plan, act, and adapt, driven by falling costs and better grounding in real data. Companies that adopt these systems now will lead the next wave of productivity.

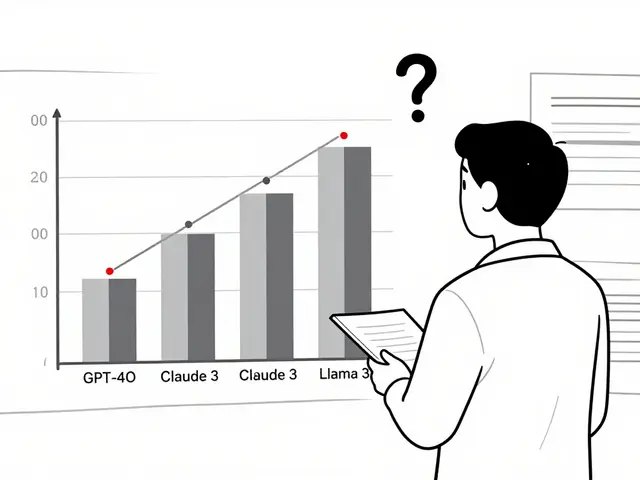

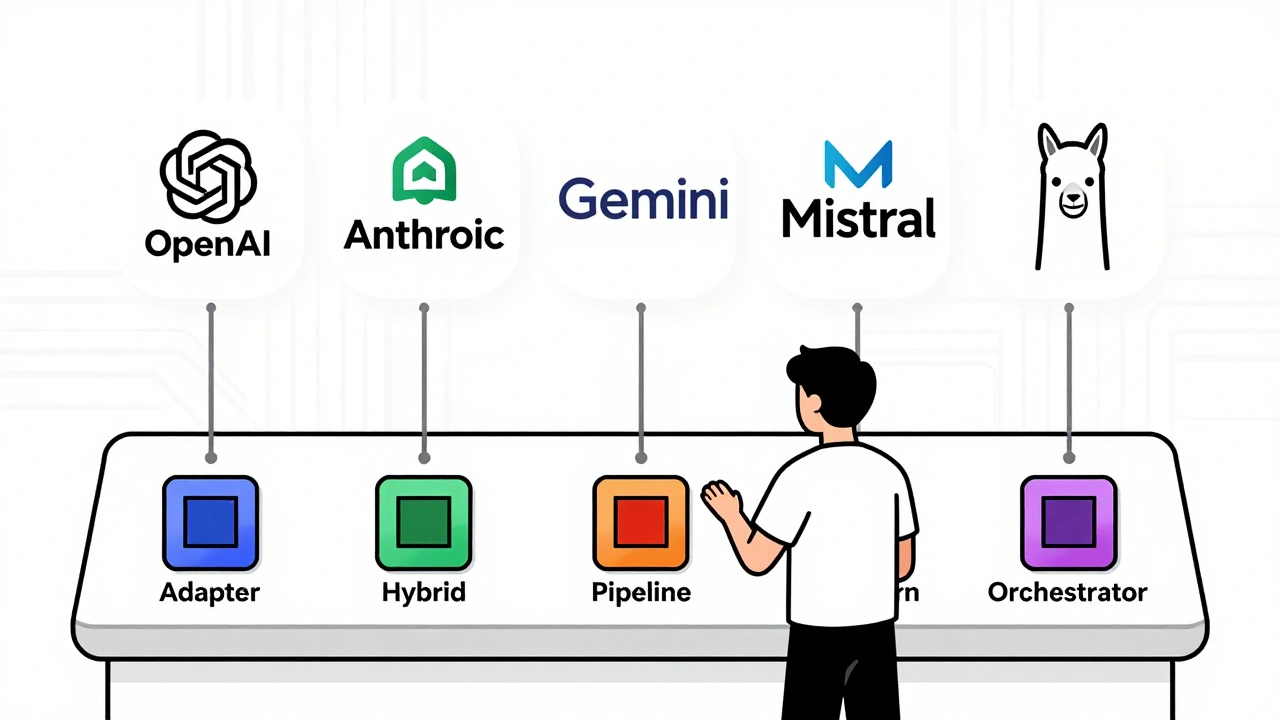

Learn how to abstract LLM providers using proven interoperability patterns like LiteLLM and LangChain to avoid vendor lock-in, cut costs, and handle model switching safely. Real-world examples and 2025 best practices included.

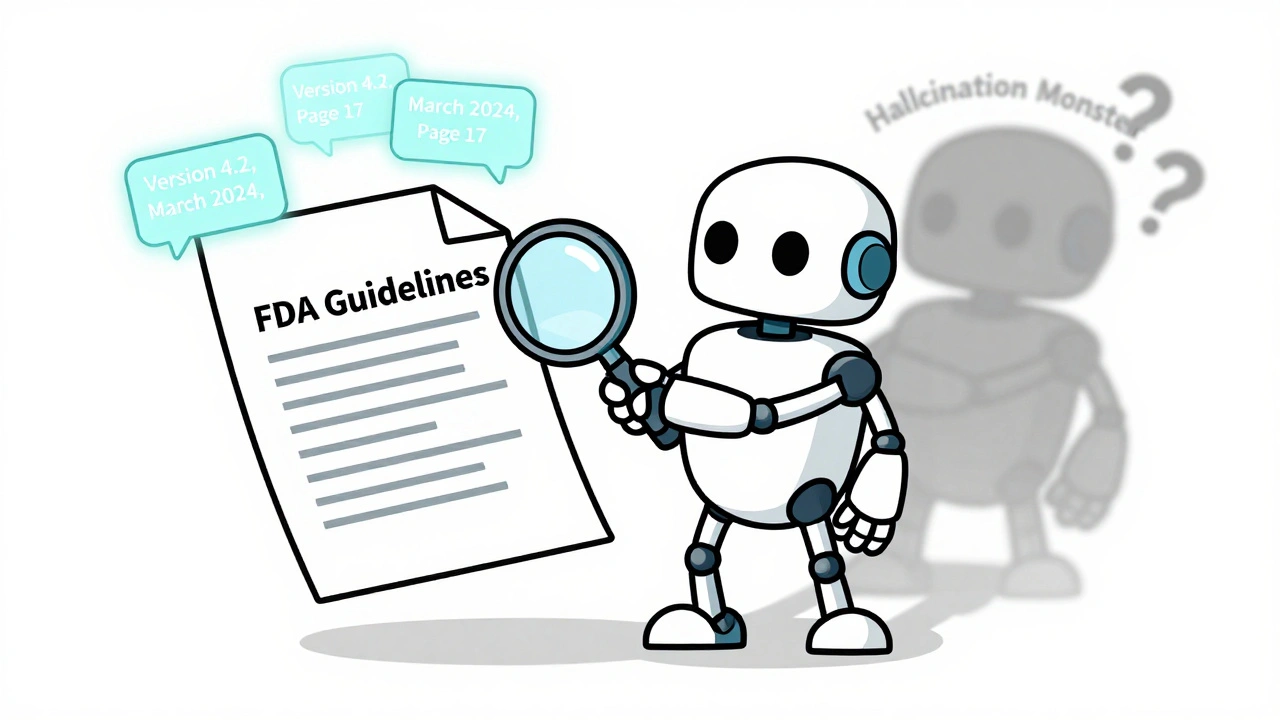

Citations in RAG systems turn AI guesses into verifiable facts. Learn how to implement accurate, trustworthy citations using real-world frameworks, data prep tips, and the latest 2025 research to meet regulatory demands and build user trust.

Learn how calibration and outlier handling keep quantized LLMs accurate when compressed to 4-bit. Discover which techniques work best for speed, memory, and reliability in real-world deployments.

Fine-tuned LLMs outperform general models in niche tasks like legal analysis, medical coding, and compliance. Learn how specialization beats scale, when to use QLoRA, and why hybrid RAG systems are the future.

Generative AI is transforming how businesses create product descriptions, emails, and social posts, cutting production time by up to 70% while scaling personalization. Learn how to use it effectively without losing brand voice or authenticity.

Artificial Intelligence
