Category: Artificial Intelligence - Page 3

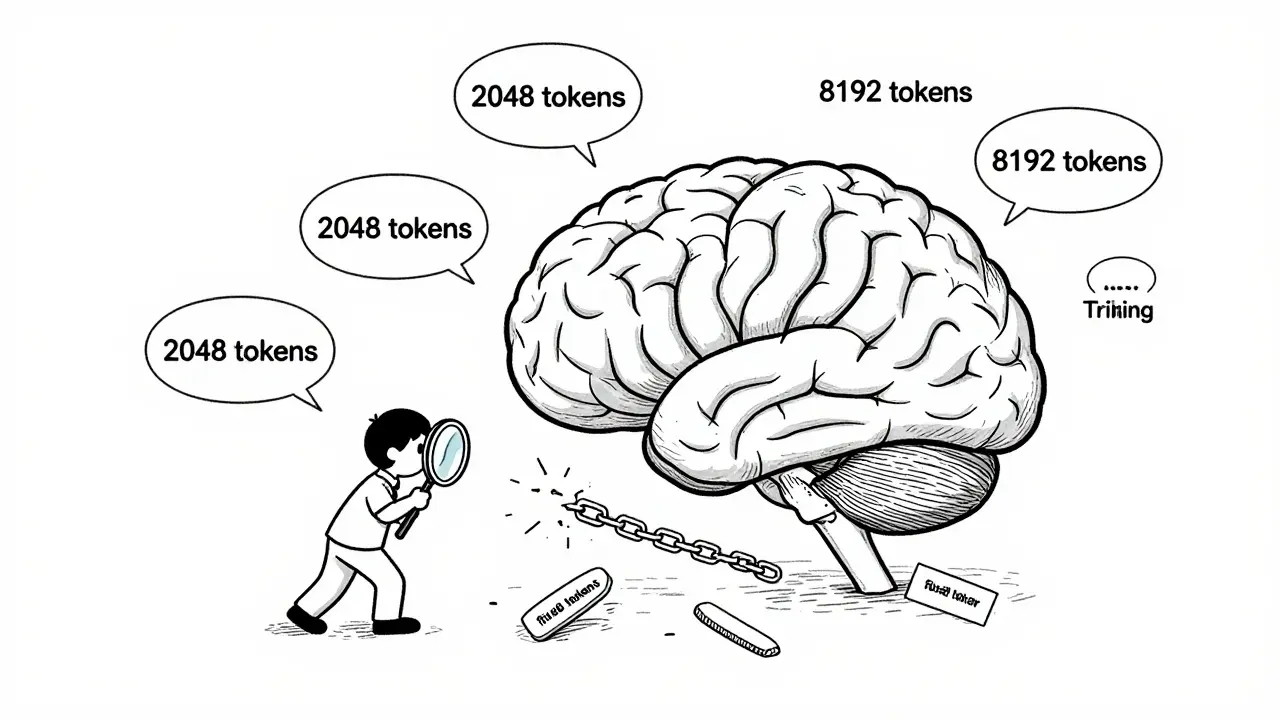

Training duration and token counts alone don't determine LLM generalization. The way sequence lengths are structured during training matters more: variable-length curricula outperform fixed-length approaches, reduce costs, and strengthen reasoning ability.

Enterprise vibe coding boosts development speed but demands strict governance. Learn how to implement security, compliance, and oversight to avoid costly mistakes and unlock real productivity gains.

Caching is essential for AI web apps to reduce latency and cut costs. Learn how to start with prompt caching, semantic search, and Redis to make your AI responses faster and cheaper.

Chunking strategies determine how well RAG systems retrieve information from documents. Page-level chunking with 15% overlap delivers the best balance of accuracy and speed for most use cases, but hybrid and adaptive methods are gaining ground quickly.
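As a rough illustration of what "page-level chunking with 15% overlap" can mean, here is a minimal sketch that assumes each chunk is one page prefixed with the last 15% of the previous page, measured in characters. The function name `chunk_pages` and the character-based overlap are hypothetical choices for illustration, not necessarily the approach described in the full post.

```python
def chunk_pages(pages: list[str], overlap: float = 0.15) -> list[str]:
    """Return one chunk per page, prefixed with the tail of the previous page.

    Hypothetical sketch: the overlap is a fraction of the previous page's
    length in characters (a token-based overlap would work the same way).
    """
    if not 0 <= overlap < 1:
        raise ValueError("overlap must be in [0, 1)")
    chunks: list[str] = []
    for i, page in enumerate(pages):
        if i == 0:
            # The first page has no predecessor, so it is used as-is.
            chunks.append(page)
            continue
        prev = pages[i - 1]
        tail_len = int(len(prev) * overlap)
        # Carry over the end of the previous page so that text split
        # across a page break can still be retrieved from one chunk.
        tail = prev[len(prev) - tail_len:]
        chunks.append(tail + page)
    return chunks


if __name__ == "__main__":
    pages = ["a" * 100, "b" * 100, "c" * 40]
    for chunk in chunk_pages(pages):
        print(len(chunk))
```

Keeping page boundaries as the unit preserves document structure, while the small overlap reduces the chance that a sentence spanning two pages is lost to both chunks.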

Disaster recovery for large language models requires specialized backups and failover strategies to protect massive model weights, training data, and inference APIs. Learn how to build a resilient AI infrastructure that minimizes downtime and avoids costly outages.

Large language models are transforming localization by understanding context, tone, and culture, not just words. Learn how they outperform traditional translation tools and what it takes to use them safely and effectively.

Multimodal AI processes text, images, audio, and video together, which can make it considerably more accurate than text-only systems on tasks that span several kinds of input. Learn how it's transforming healthcare, customer service, and retail with real-world results.

Small changes in how you phrase a question can drastically alter an AI's response. Learn why prompt sensitivity makes LLMs unpredictable, how it breaks real applications, and proven ways to get consistent, reliable outputs.

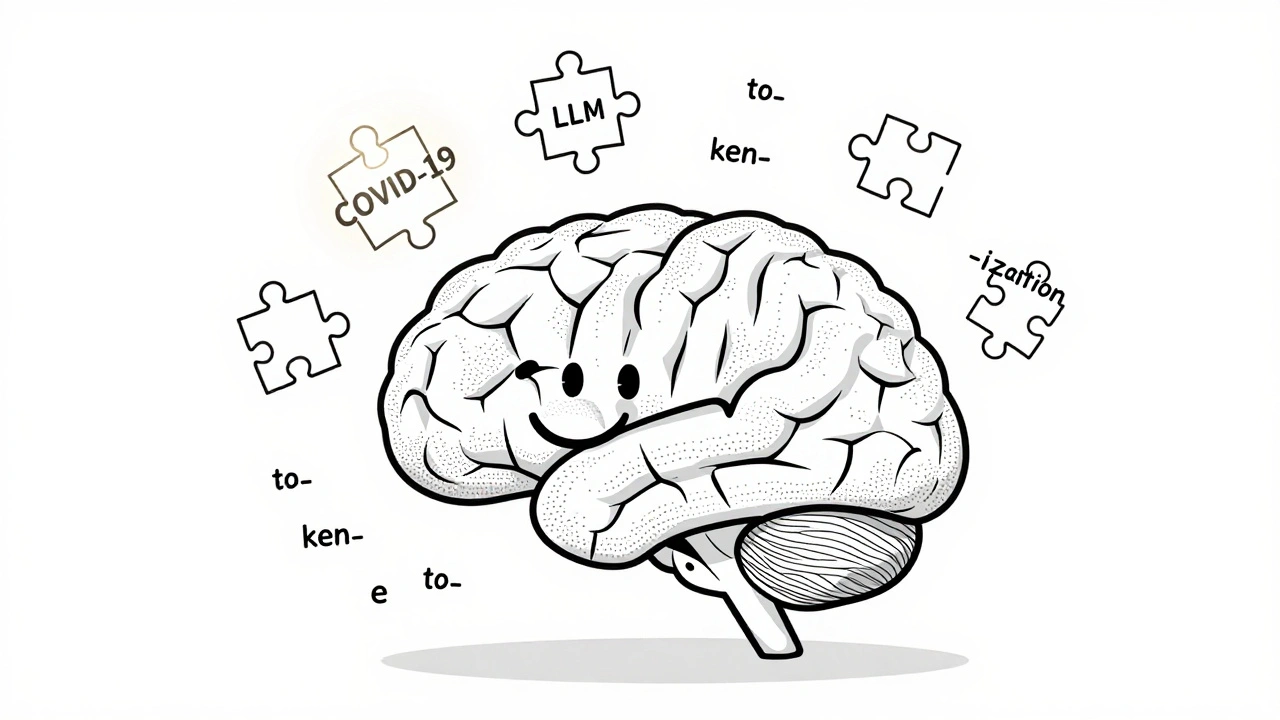

Despite the rise of massive language models, tokenization remains essential for accuracy, efficiency, and cost control. Learn why subword methods like BPE, and tokenizers such as SentencePiece, still shape how LLMs understand language.

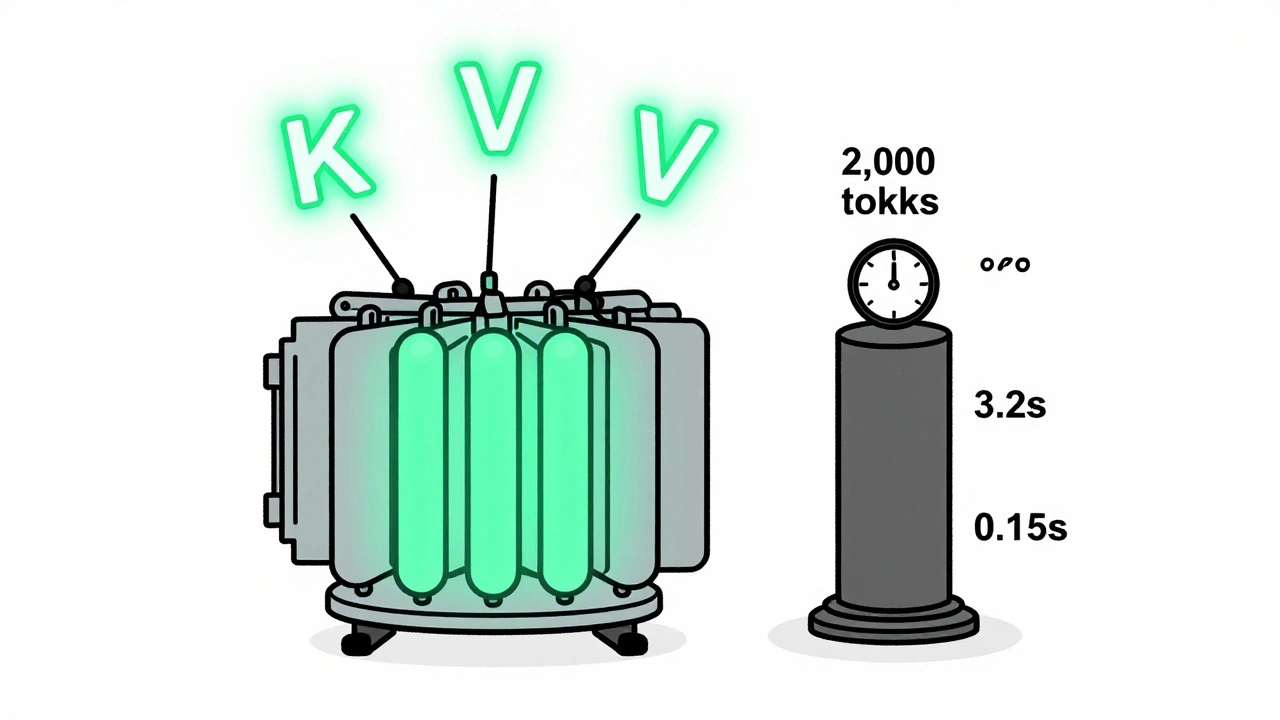

KV caching and continuous batching are essential for fast, affordable LLM serving. Learn how they avoid redundant computation, make better use of GPU memory, boost throughput, and enable real-world deployment on consumer hardware.

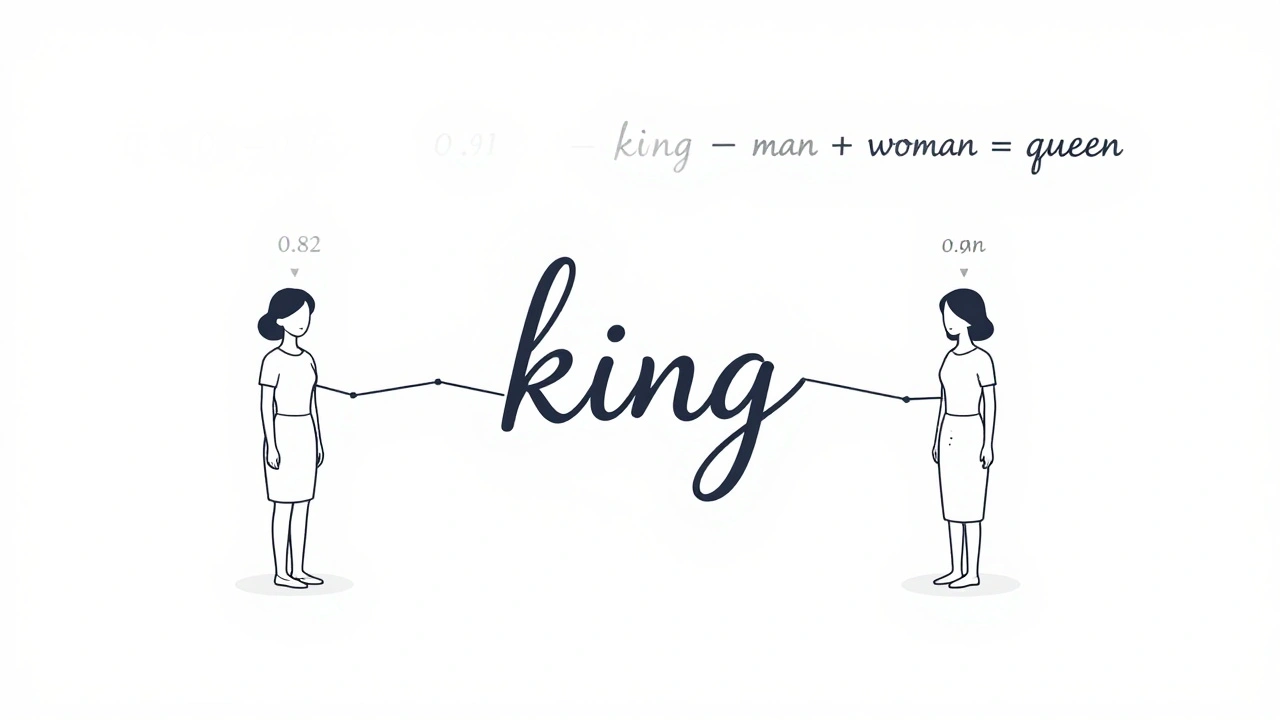

Learn how embeddings, attention, and feedforward networks form the core of modern large language models like GPT and Llama. No jargon, just clear explanations of how AI understands and generates human language.

Government agencies are now procuring AI coding tools with strict SLAs and compliance requirements. Learn how contracts for AI CaaS differ from commercial tools, what SLAs are mandatory, and how agencies are avoiding costly mistakes in 2025.

Recent posts

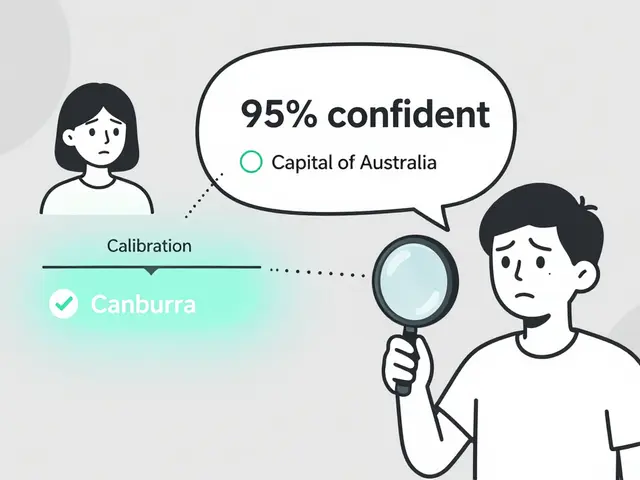

Token Probability Calibration in Large Language Models: How to Fix Overconfidence in AI Responses

Jan 16, 2026

Calibration and Outlier Handling in Quantized LLMs: How to Keep Accuracy When Compressing Models

Jul 6, 2025

Localization and Translation Using Large Language Models: How Context-Aware Outputs Are Changing the Game

Nov 19, 2025
