PCables AI Interconnects - Page 2

This article explains how AI-generated dark patterns manipulate users, with real examples and regulations. Learn a 5-step ethical audit to prevent deceptive designs and protect digital trust.

Discover why traditional metrics fail for compressed LLMs and how modern protocols like LLM-KICK and the EleutherAI LM Evaluation Harness accurately assess performance. Learn key dimensions, implementation steps, and future trends in model compression evaluation.

Discover how rotary embeddings, ALiBi, and memory mechanisms enable AI models to handle up to 1 million tokens. Learn key differences, real-world impacts, and future trends in long-context AI.

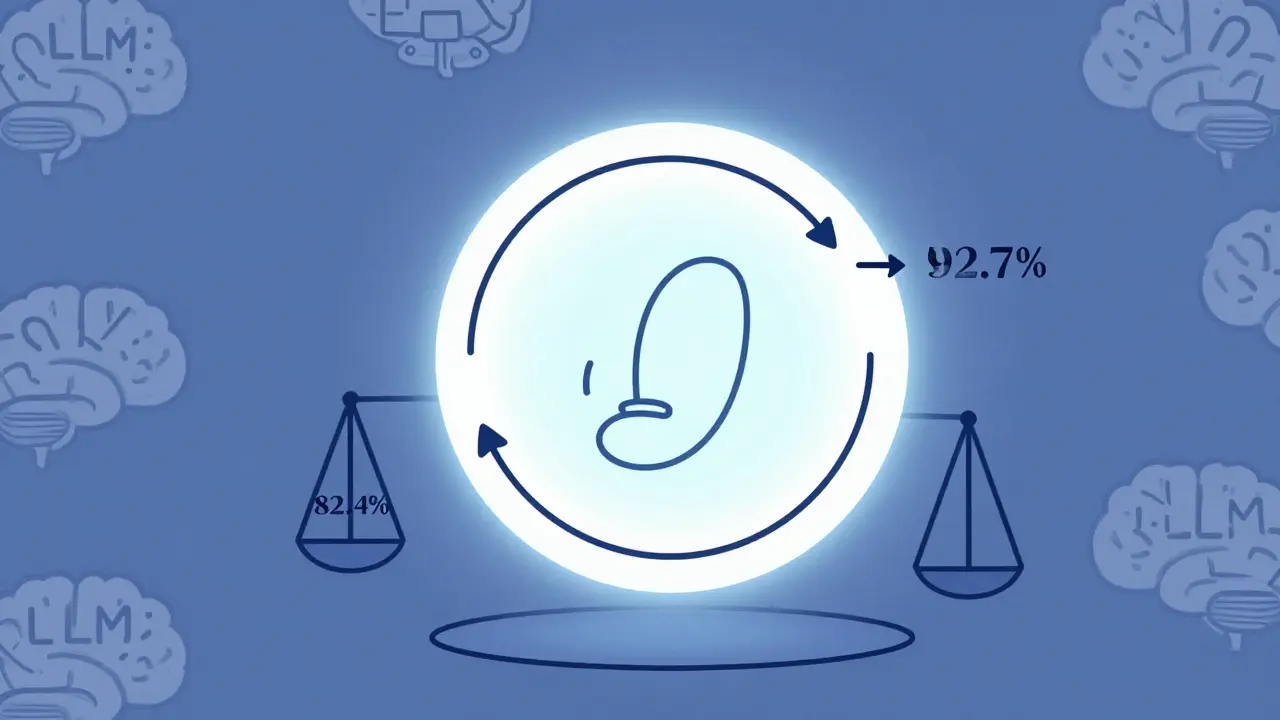

Reinforcement Learning from Prompts (RLfP) automates prompt optimization using feedback loops, boosting LLM accuracy by up to 10% on key benchmarks. Learn how PRewrite and PRL work, their real-world gains, hidden costs, and who should use them.

Generative AI can now describe images for alt text, helping make the web more accessible. But accuracy gaps, which matter most to the people with disabilities who rely on alt text, mean human review is still essential.

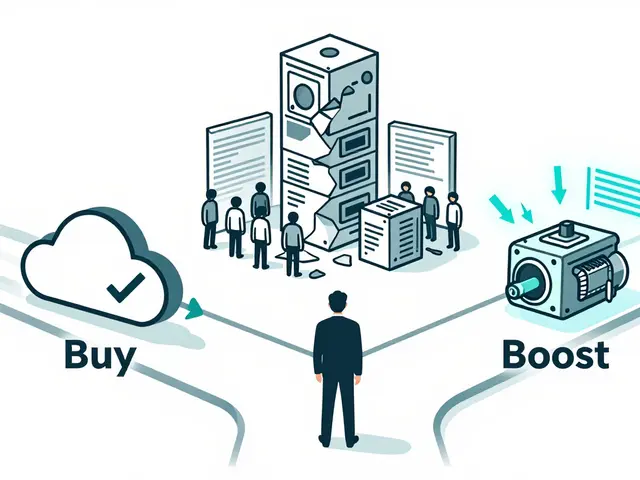

A practical guide for CIOs on choosing between building or buying generative AI platforms. Learn when to buy, boost, or build based on cost, risk, speed, and business impact, backed by 2024-2025 enterprise data.

Learn how to manage defects, technical debt, and enhancements in vibe coding using AI-assisted development. Without proper backlog hygiene, AI creates more work than it saves.

AI-generated interfaces are breaking web accessibility standards at scale. WCAG wasn’t built for dynamic AI content, and real users are paying the price. Here’s why compliance is failing, and what actually works.

LLMs memorize personal data, making traditional privacy methods useless. Learn the seven core principles and practical controls, like differential privacy and PII detection, that actually protect user data in 2026.

Vibe coding boosts weekly developer output by up to 126% by automating boilerplate, UI, and API tasks. Learn how AI-assisted tools like GitHub Copilot drive real productivity gains, and how to avoid the hidden traps of technical debt and security risks.

Modern generative AI isn't powered by bigger models anymore; it's built on smarter architectures. Discover how MoE, verifiable reasoning, and hybrid systems are making AI faster, cheaper, and more reliable in 2025.

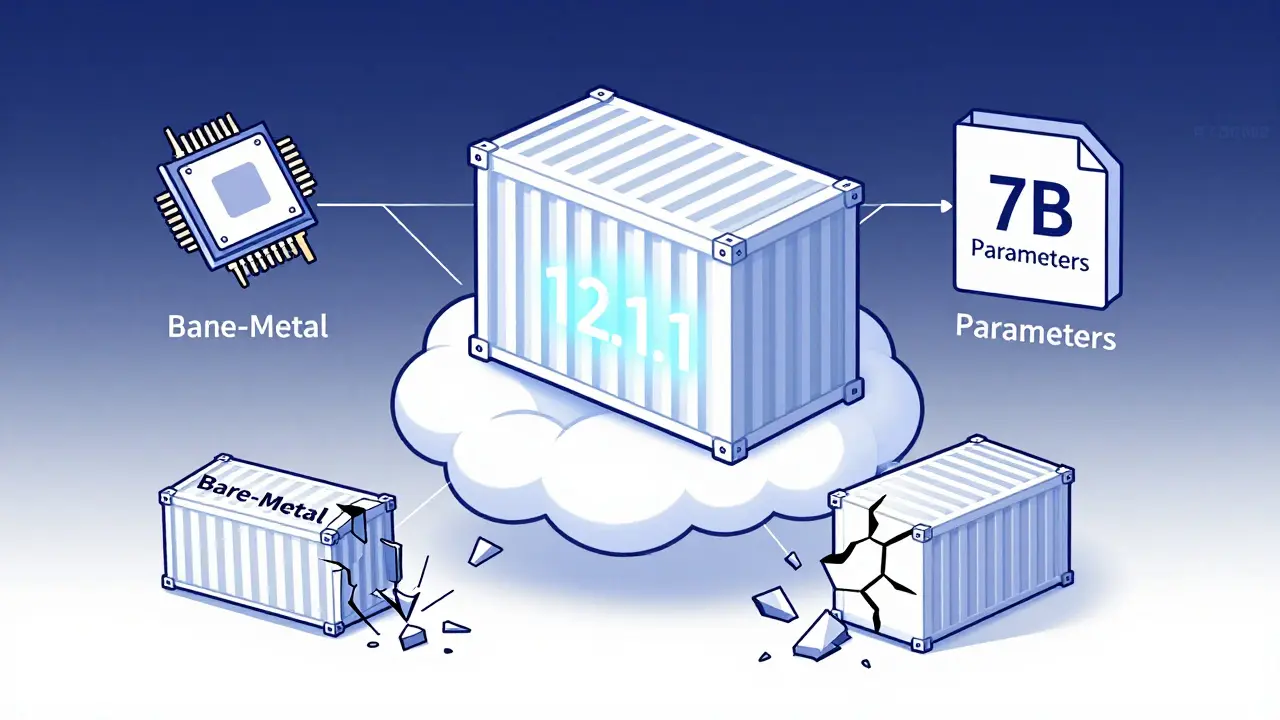

Containerizing large language models requires precise CUDA version matching, optimized Docker images, and secure model formats like .safetensors. Learn how to reduce startup time, shrink image size, and avoid the most common deployment failures.

Artificial Intelligence
