Author: Phillip Ramos - Page 2

Vibe coding can boost weekly developer output by up to 126% when it automates boilerplate, UI, and API tasks. Learn how AI-assisted tools like GitHub Copilot drive real productivity gains, and how to avoid the hidden traps of technical debt and security risks.

Modern generative AI isn't powered by bigger models anymore; it's built on smarter architectures. Discover how mixture-of-experts (MoE) models, verifiable reasoning, and hybrid systems are making AI faster, cheaper, and more reliable in 2025.
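
The core idea behind mixture-of-experts, routing each input to only a few expert sub-networks, can be sketched in a few lines of Python. This is a toy illustration, not how any particular model implements it: the experts are plain hypothetical functions, the gate logits are passed in directly instead of being produced by a learned router, and `moe_forward` is a name chosen for this example.

```python
import math
from typing import Callable, List, Sequence

def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a short list of logits."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def moe_forward(
    x: float,
    gate_logits: Sequence[float],
    experts: Sequence[Callable[[float], float]],
    k: int = 2,
) -> float:
    """Toy top-k MoE layer (hypothetical): only the k highest-scoring experts run."""
    # Rank experts by gate logit and keep the top k.
    top = sorted(range(len(experts)), key=lambda i: gate_logits[i], reverse=True)[:k]
    # Renormalize the gate weights over the selected experts only.
    weights = softmax([gate_logits[i] for i in top])
    # Unselected experts are never called; that skipped work is the compute saving.
    return sum(w * experts[i](x) for w, i in zip(weights, top))

# Four hypothetical "experts" that simply scale their input.
experts = [lambda v, s=s: v * s for s in (1.0, 2.0, 3.0, 4.0)]
print(moe_forward(1.0, gate_logits=[0.0, 0.0, 5.0, 5.0], experts=experts, k=2))  # → 3.5
```

In a real MoE layer each expert is a feed-forward network and the router is trained jointly with it, but the select-then-weight pattern is the same.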

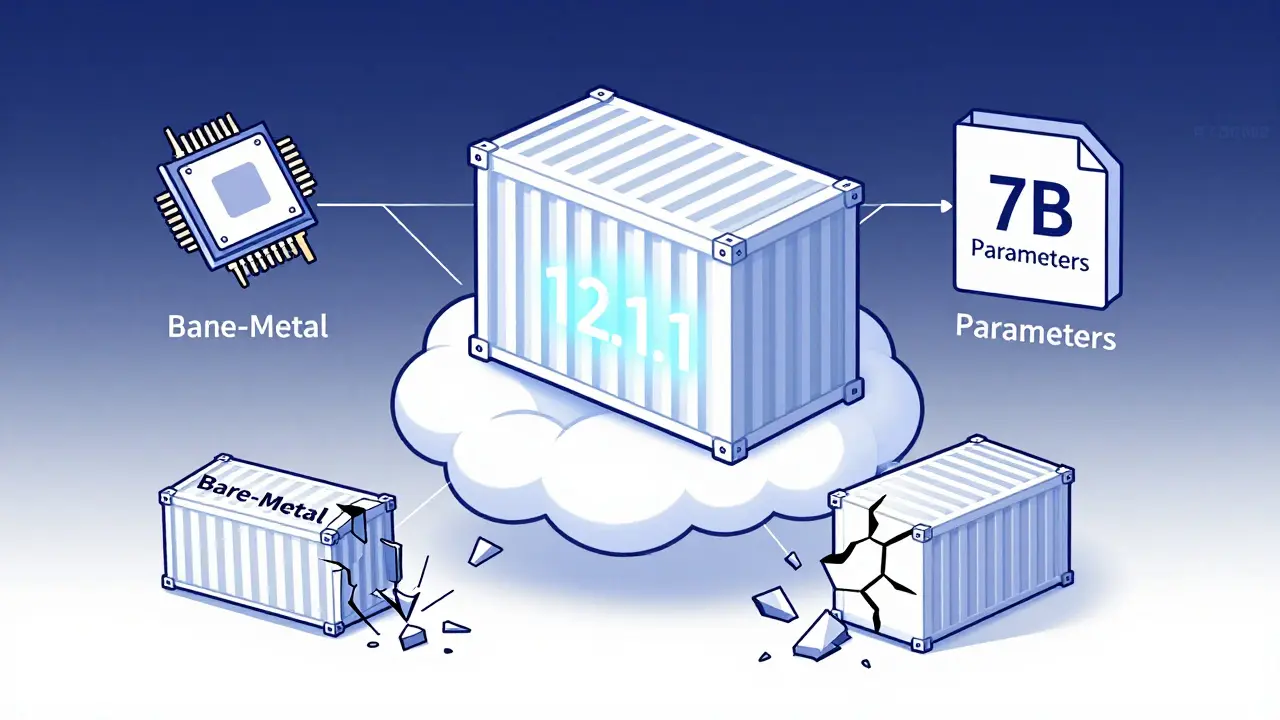

Containerizing large language models requires precise CUDA version matching, optimized Docker images, and secure model formats like .safetensors. Learn how to reduce startup time, shrink image size, and avoid the most common deployment failures.

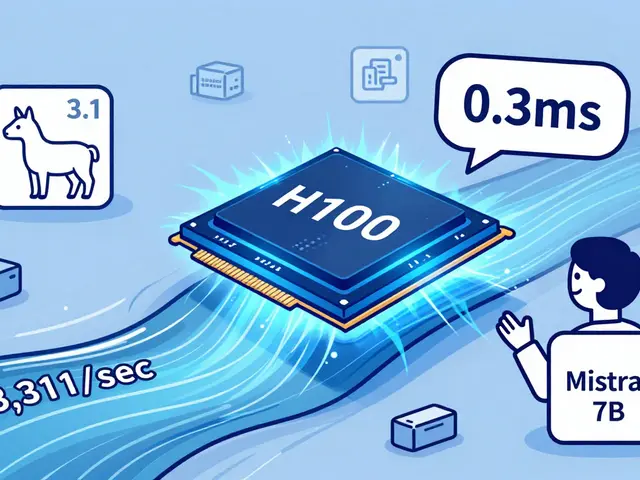

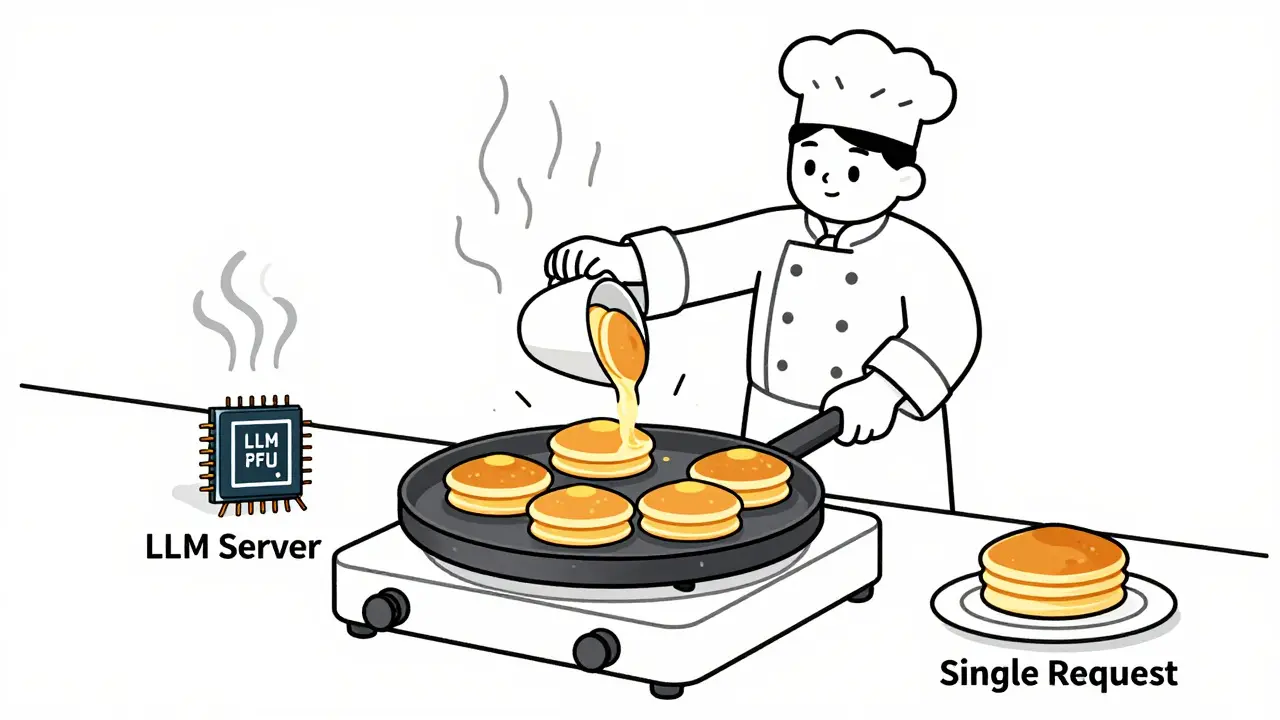

Learn how to choose optimal batch sizes for LLM serving to cut cost per token by up to 87%. Discover real-world results, batching types, hardware trade-offs, and proven techniques to reduce AI infrastructure costs.
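
The link between batch size and cost per token comes down to simple arithmetic: if the GPU's hourly price is fixed, cost per token falls in proportion to the throughput that batching adds. The sketch below assumes a hypothetical $2.00/hour GPU and made-up throughput figures for batch sizes 1 and 32; these are placeholders, not measurements from the article, and real gains depend on the model, hardware, and sequence lengths.

```python
def cost_per_million_tokens(gpu_usd_per_hour: float, tokens_per_second: float) -> float:
    """Serving cost per 1M generated tokens for a GPU billed by the hour."""
    tokens_per_hour = tokens_per_second * 3600
    return gpu_usd_per_hour / tokens_per_hour * 1_000_000

def relative_savings(baseline_tps: float, batched_tps: float) -> float:
    """Fractional cost reduction when the same GPU serves more tokens per second."""
    return 1 - baseline_tps / batched_tps

# Hypothetical throughput numbers, for illustration only.
GPU_PRICE = 2.00
throughput = {1: 400.0, 32: 2000.0}  # batch size -> tokens/second

for batch, tps in throughput.items():
    print(f"batch={batch:>2}: ${cost_per_million_tokens(GPU_PRICE, tps):.2f} per 1M tokens")
print(f"savings: {relative_savings(throughput[1], throughput[32]):.0%}")
```

The catch is latency: requests in a larger batch typically share compute and wait on each other, so the throughput-optimal batch size is not always acceptable for interactive traffic.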

Vibe coding lets fintech teams build tools with natural language prompts instead of code. Learn how mock data, compliance guardrails, and AI-powered workflows are changing how financial apps are developed, without sacrificing security or regulatory compliance.

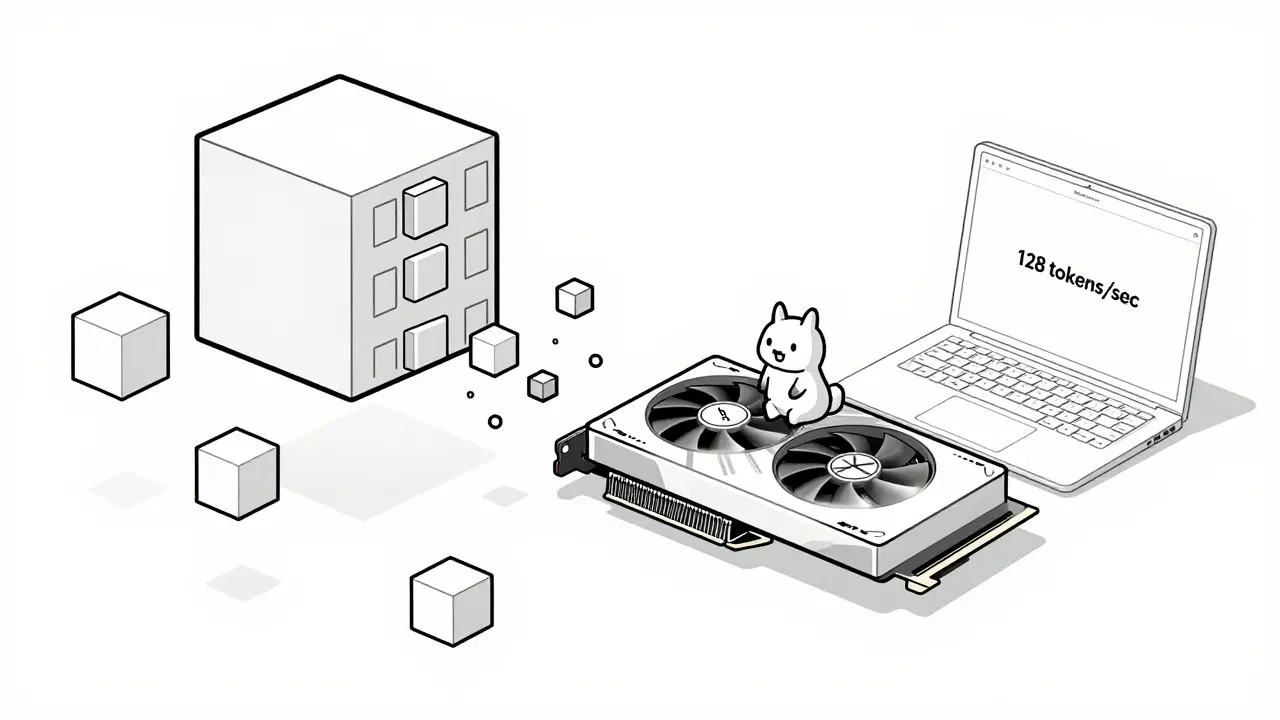

Learn how hardware-friendly LLM compression lets you run powerful AI models on consumer GPUs and CPUs. Discover quantization, sparsity, and real-world performance gains without needing a data center.
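
Quantization, one of the compression techniques mentioned here, stores weights in fewer bits. Below is a minimal sketch of symmetric per-tensor int8 quantization; production toolkits usually use per-channel or per-group scales and calibrated ranges, so treat `quantize_int8` and `dequantize_int8` as illustrative helpers rather than any library's API.

```python
from typing import List, Tuple

def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantization: each w is approximated by q * scale."""
    max_abs = max(abs(w) for w in weights)
    # Map the largest magnitude onto 127; guard against an all-zero tensor.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the symmetric int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize_int8(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from int8 codes."""
    return [v * scale for v in q]

weights = [0.42, -1.27, 0.0, 0.91, -0.05]
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)
max_err = max(abs(a - b) for a, b in zip(weights, restored))
print(q, f"scale={scale:.4f}", f"max_err={max_err:.5f}")
```

Each int8 code takes 1 byte instead of the 4 bytes of a float32, a 4x cut in weight memory, in exchange for a rounding error of at most half a quantization step (`scale / 2`) per weight.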

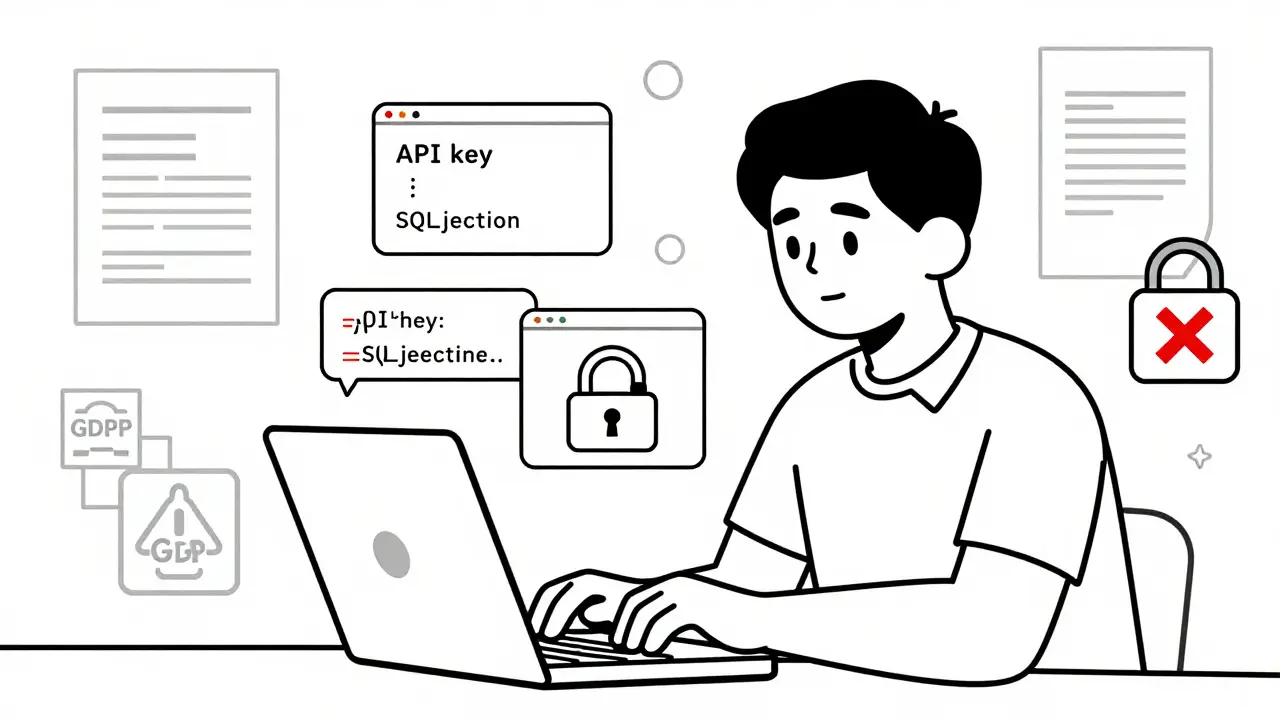

Procurement checklists for vibe coding tools must include security controls and legal terms to avoid data breaches, copyright lawsuits, and compliance fines. Learn what to demand from AI coding tools like GitHub Copilot and Cursor.

NLP pipelines and end-to-end LLMs aren't competitors; they're complementary. Learn when to use each for speed, cost, accuracy, and compliance in real-world AI systems.

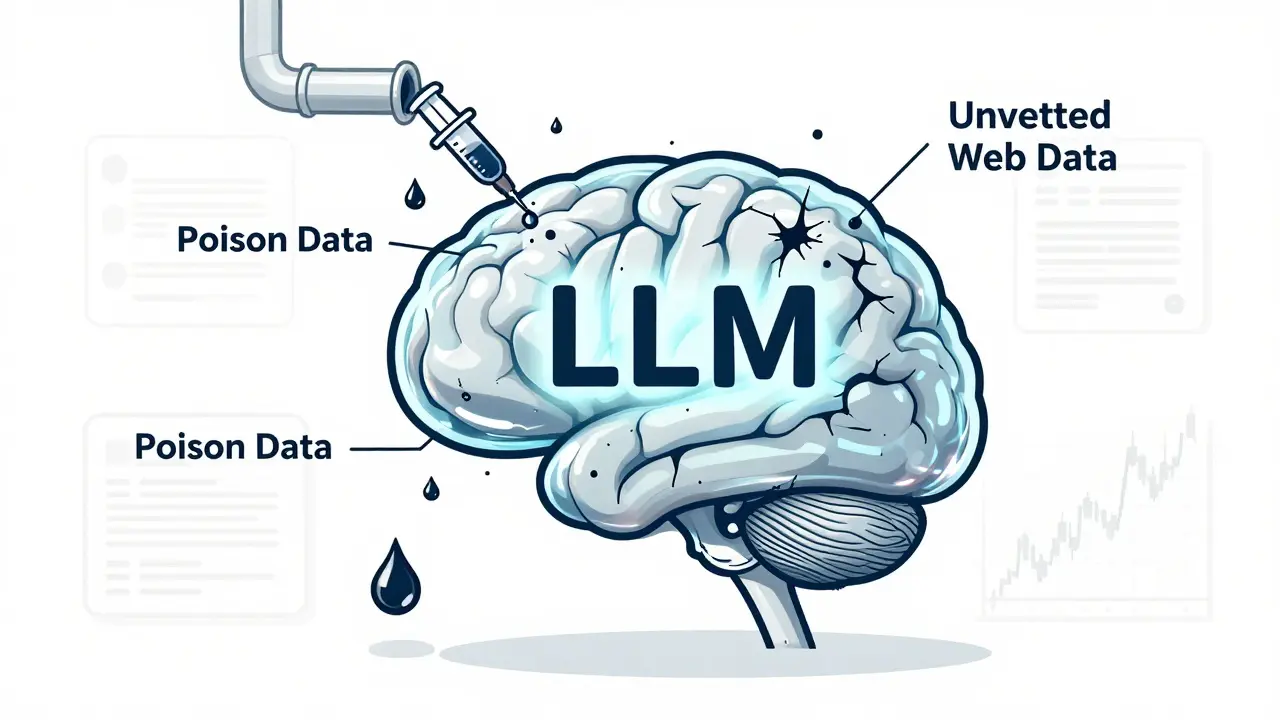

Training data poisoning lets attackers corrupt AI models with tiny amounts of fake data, leading to hidden backdoors and dangerous outputs. Learn how it works, real-world cases, and proven defenses to protect your LLMs.

Error-forward debugging lets you feed stack traces directly to LLMs to get instant, accurate fixes for code errors. Learn how it works, why it's faster than traditional methods, and how to use it safely today.
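
In practice, error-forward debugging means capturing the full stack trace and packaging it with the failing code into a prompt. Here is a minimal Python sketch using the standard `traceback` and `re` modules. The prompt wording, the `build_debug_prompt` helper, and the secret-redaction pattern are assumptions for illustration; the actual LLM call is left out because it depends on your provider.

```python
import re
import traceback

# Hypothetical pattern: masks values assigned to names that look like credentials.
SECRET_PATTERN = re.compile(r"(?i)(api[_-]?key|token|password)\s*[=:]\s*\S+")

def redact(text: str) -> str:
    """Mask likely secrets before the text leaves your machine."""
    return SECRET_PATTERN.sub(r"\1=<redacted>", text)

def build_debug_prompt(code: str, exc: BaseException) -> str:
    """Combine the failing code and its full stack trace into one LLM prompt."""
    trace = "".join(traceback.format_exception(type(exc), exc, exc.__traceback__))
    prompt = (
        "The following Python code raised an exception.\n\n"
        f"Code:\n{code}\n\n"
        f"Stack trace:\n{trace}\n"
        "Explain the root cause and propose a minimal fix."
    )
    return redact(prompt)

snippet = 'API_KEY = "sk-demo"\nprices = {"apple": 1.0}\nprices["pear"]'
try:
    prices = {"apple": 1.0}
    _ = prices["pear"]  # raises KeyError
except KeyError as err:
    prompt = build_debug_prompt(snippet, err)
    print(prompt)
```

Redacting before sending is one way to address the "use it safely" concern: stack traces and surrounding code can carry credentials or customer data.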

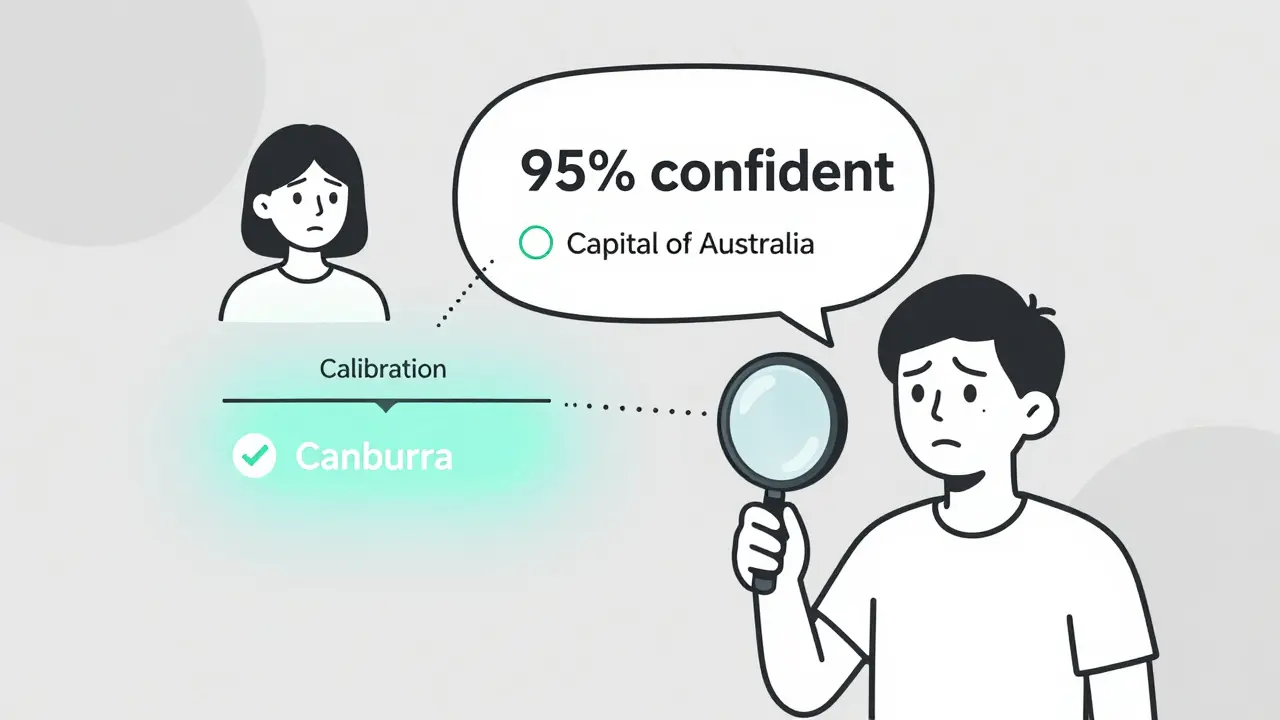

Most LLMs are overconfident in their answers. Token probability calibration fixes this by aligning confidence scores with real accuracy. Learn how it works, which models are best, and how to apply it.
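
One common way to measure and correct overconfidence is to compute expected calibration error (ECE), then apply temperature scaling, which divides the logits by a single fitted constant T before the softmax. The article may rely on other methods; this sketch, with made-up logits and a simple grid search over T, only illustrates the idea.

```python
import math
from typing import List, Sequence

def softmax(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Softmax of logits divided by a temperature (T > 1 softens confidence)."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def expected_calibration_error(conf: Sequence[float], correct: Sequence[int], n_bins: int = 10) -> float:
    """Weighted average gap between confidence and accuracy across confidence bins."""
    n = len(conf)
    ece = 0.0
    for b in range(n_bins):
        lo, hi = b / n_bins, (b + 1) / n_bins
        idx = [i for i, c in enumerate(conf) if lo < c <= hi or (b == 0 and c == 0.0)]
        if not idx:
            continue
        acc = sum(correct[i] for i in idx) / len(idx)
        avg_conf = sum(conf[i] for i in idx) / len(idx)
        ece += len(idx) / n * abs(acc - avg_conf)
    return ece

def fit_temperature(logit_rows, labels) -> float:
    """Pick T from a grid to minimize negative log-likelihood on held-out data."""
    grid = [0.5 + 0.1 * i for i in range(46)]  # 0.5 ... 5.0

    def nll(t: float) -> float:
        return -sum(math.log(softmax(row, t)[y]) for row, y in zip(logit_rows, labels)) / len(labels)

    return min(grid, key=nll)

def top1(logit_rows, labels, t):
    """Confidence of the top prediction and whether it was correct."""
    conf, correct = [], []
    for row, y in zip(logit_rows, labels):
        probs = softmax(row, t)
        pred = max(range(len(probs)), key=probs.__getitem__)
        conf.append(probs[pred])
        correct.append(int(pred == y))
    return conf, correct

# Made-up validation data: the model always predicts class 0 with ~98% confidence,
# but is right only two times out of three.
logit_rows = [[4.0, 0.0]] * 3
labels = [0, 0, 1]

t = fit_temperature(logit_rows, labels)
before = expected_calibration_error(*top1(logit_rows, labels, 1.0))
after = expected_calibration_error(*top1(logit_rows, labels, t))
print(f"T={t:.1f}  ECE before={before:.3f}  after={after:.3f}")
```

Because dividing every logit by the same T never changes which class scores highest, temperature scaling adjusts confidence without changing the model's predictions.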

LLM-powered semantic search is transforming e-commerce by understanding user intent instead of just matching keywords. See how it boosts conversions, reduces abandonment, and what you need to implement it successfully.
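
At its core, semantic search ranks products by the similarity of embedding vectors, not by shared keywords. The sketch below uses cosine similarity over tiny hand-written 3-dimensional vectors. In a real system the vectors would come from an embedding model and live in a vector index, so the catalog, the vector values, and the `semantic_search` helper are all hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple

def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity: 1.0 means the vectors point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def semantic_search(
    query_vec: Sequence[float], catalog: Dict[str, Sequence[float]], top_k: int = 2
) -> List[Tuple[str, float]]:
    """Return the top_k catalog items most similar to the query embedding."""
    scored = [(name, cosine(query_vec, vec)) for name, vec in catalog.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]

# Hypothetical embeddings; imagine the dimensions as (outdoor, footwear, formal).
catalog = {
    "trail running shoes": [0.9, 0.8, 0.0],
    "hiking boots": [0.8, 0.9, 0.1],
    "leather dress shoes": [0.0, 0.7, 0.9],
    "camping tent": [0.9, 0.0, 0.0],
}
# Pretend embedding of the query "something to wear on a mountain hike".
query = [0.8, 0.7, 0.0]

results = semantic_search(query, catalog)
for name, score in results:
    print(f"{score:.3f}  {name}")
```

With real embeddings, the same ranking step lets a query phrased in the shopper's own words match products whose titles use different vocabulary.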

Artificial Intelligence
