PCables AI Interconnects - Page 4

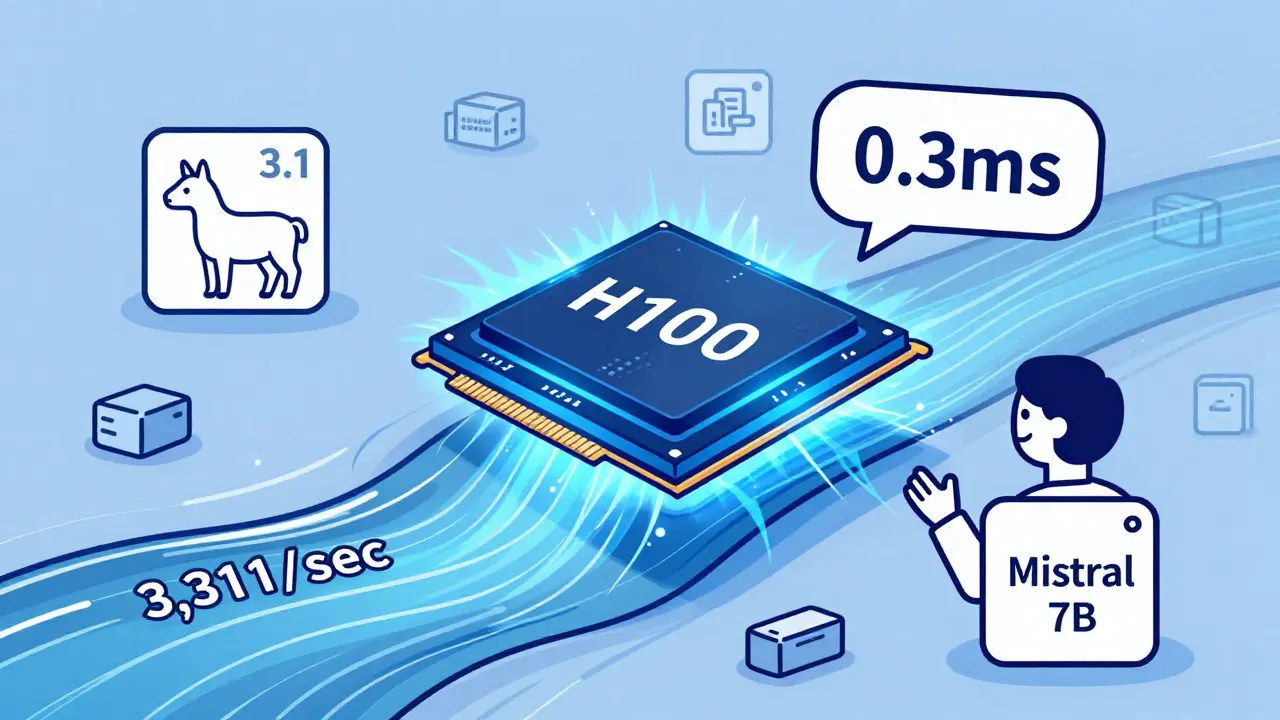

Learn how to choose between NVIDIA A100, H100, and CPU offloading for LLM inference in 2025. See real performance numbers, cost trade-offs, and which option actually works for production.

Learn how vibe-coded internal wikis and demo videos capture team culture to improve onboarding, retention, and decision-making. Discover tools, pitfalls, and real-world examples that make knowledge sharing stick.

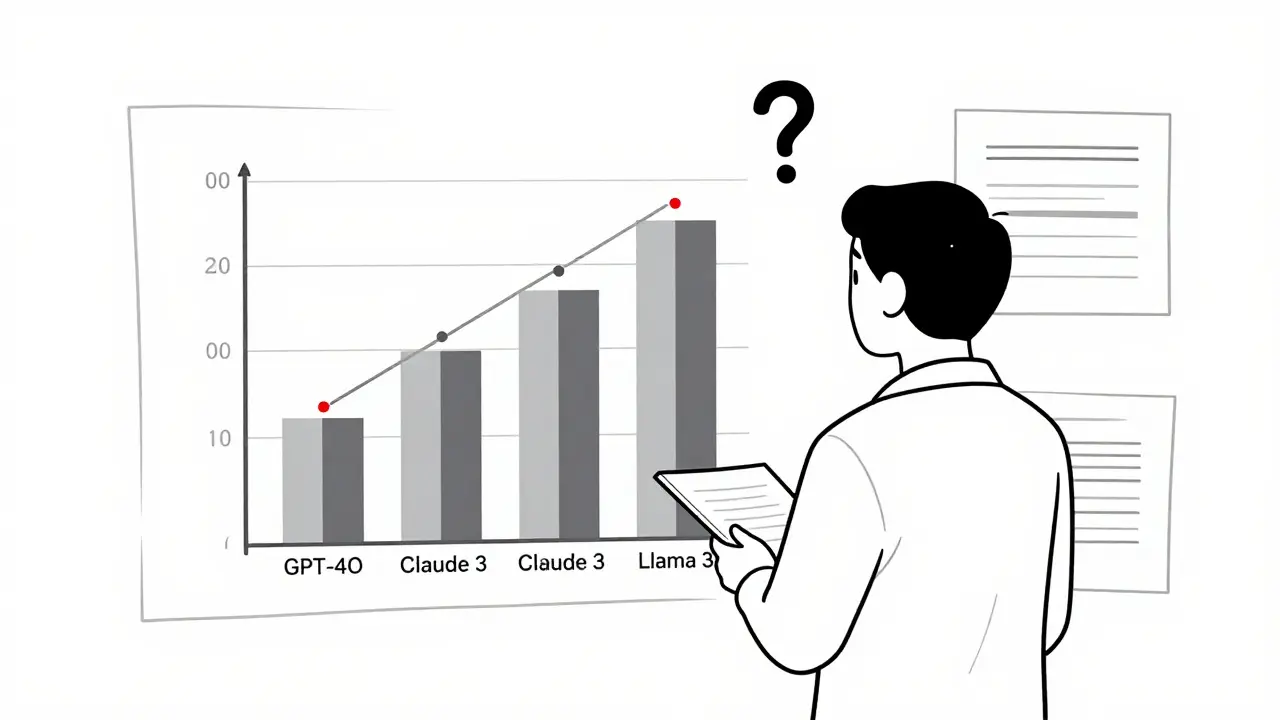

Learn how to visualize LLM evaluation results effectively using bar charts, scatter plots, heatmaps, and parallel coordinates. Avoid common pitfalls and choose the right tool for your needs.

Speculative decoding and Mixture-of-Experts (MoE) are cutting LLM serving costs by up to 70%. Learn how these techniques boost speed, reduce hardware needs, and make powerful AI models affordable at scale.

Generative AI coding assistants like GitHub Copilot and CodeWhisperer are transforming software development in 2025, boosting productivity by up to 25%, but only when used correctly. Learn the real gains, hidden costs, and how to avoid common pitfalls.

Generative AI is transforming finance teams by automating forecasting and explaining variance causes in plain language. Teams using it report 25% higher accuracy, 57% fewer forecast errors, and major time savings, without needing to be tech experts.

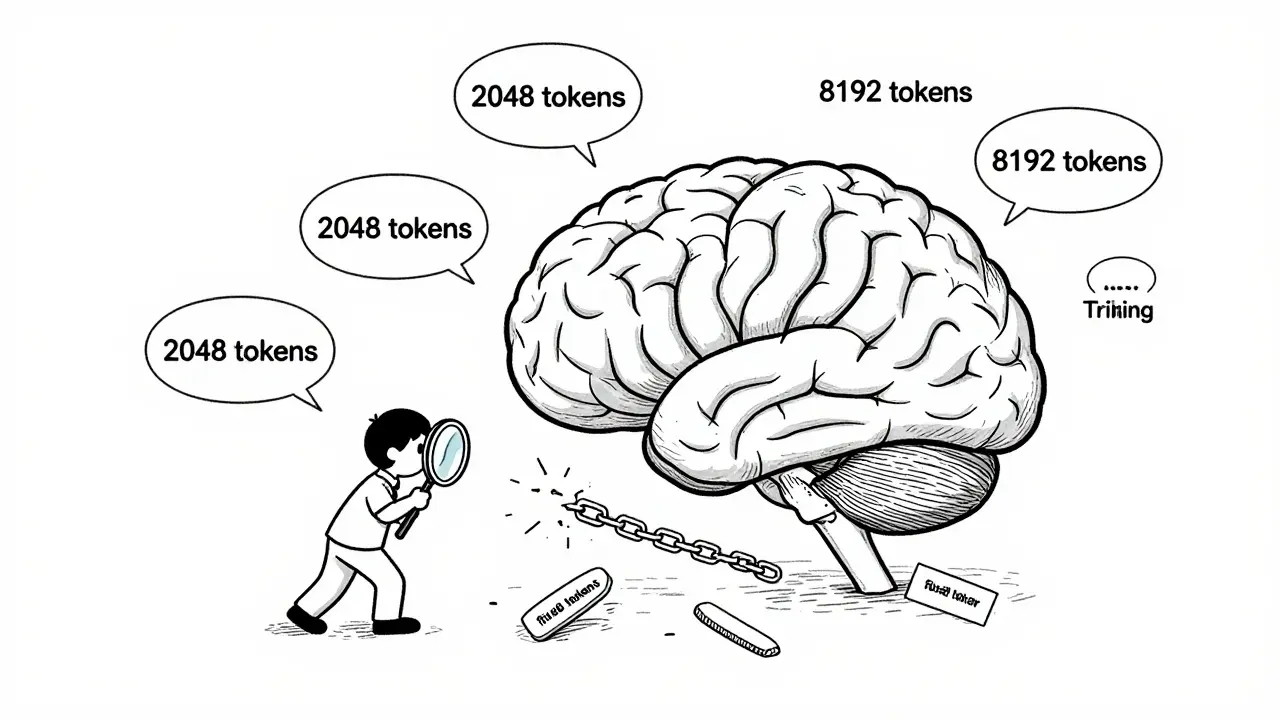

Training duration and token counts alone don't determine LLM generalization. How sequence lengths are structured during training matters more: variable-length curricula outperform fixed-length approaches, reduce costs, and unlock true reasoning ability.

Enterprise vibe coding boosts development speed but demands strict governance. Learn how to implement security, compliance, and oversight to avoid costly mistakes and unlock real productivity gains.

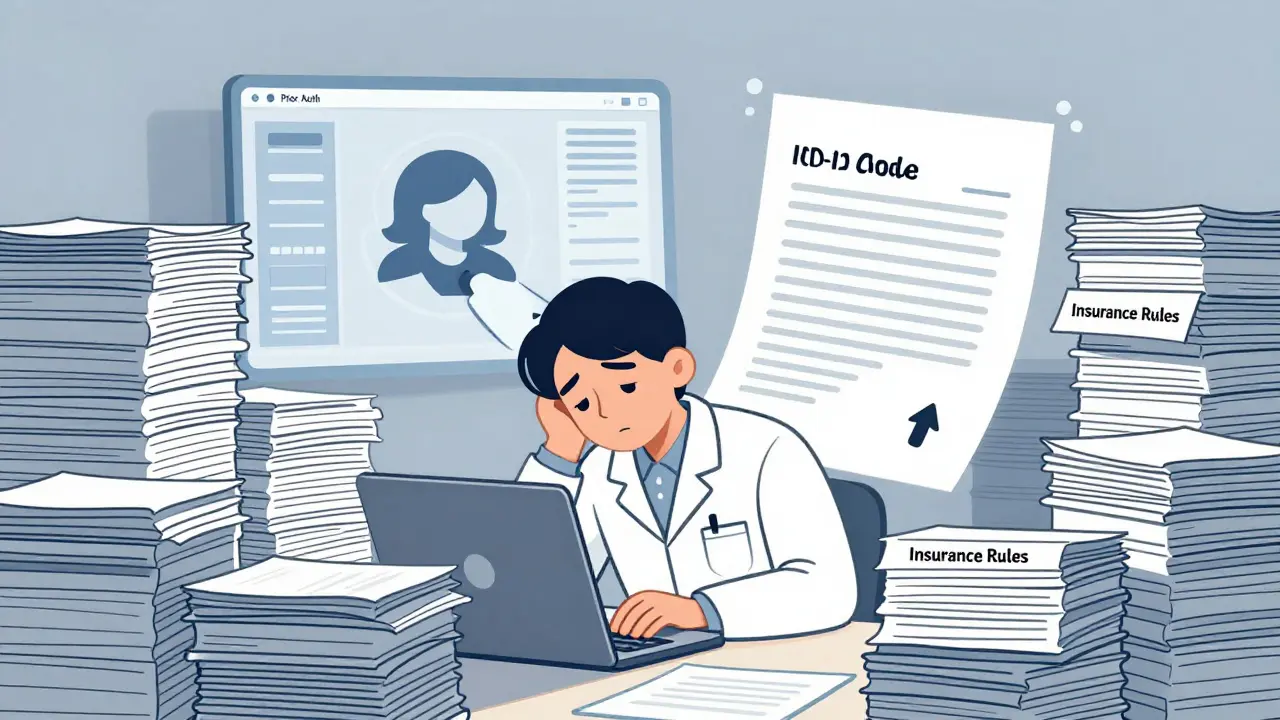

Generative AI is cutting prior authorization and clinical summary times by up to 70% in healthcare systems. Learn how AI tools like Nuance DAX and Epic Samantha are reducing administrative burnout, cutting denials, and saving millions, with real results from 2025.

Caching is essential for AI web apps to reduce latency and cut costs. Learn how to start with prompt caching, semantic search, and Redis to make your AI responses faster and cheaper.

Chunking strategies determine how well RAG systems retrieve information from documents. Page-level chunking with 15% overlap delivers the best balance of accuracy and speed for most use cases, but hybrid and adaptive methods are rising fast.

Disaster recovery for large language models requires specialized backups and failover strategies to protect massive model weights, training data, and inference APIs. Learn how to build a resilient AI infrastructure that minimizes downtime and avoids costly outages.

Artificial Intelligence