PCables AI Interconnects - Page 3

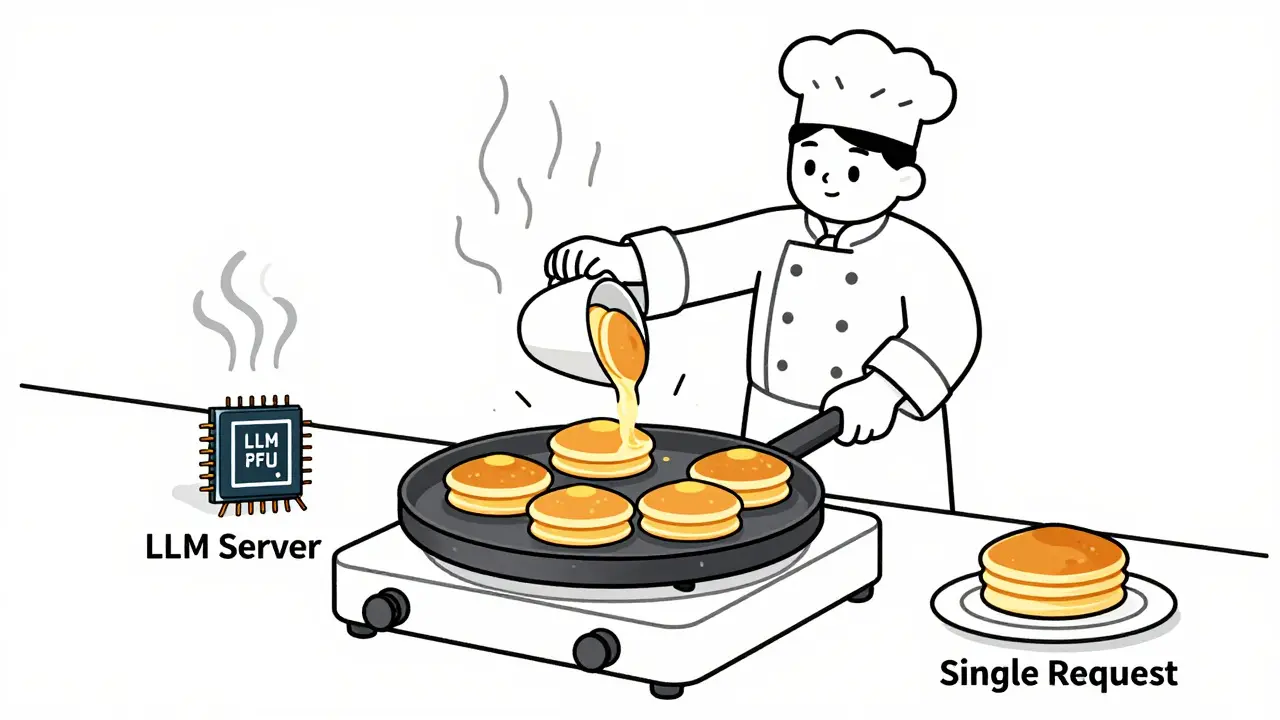

Learn how to choose optimal batch sizes for LLM serving to cut cost per token by up to 87%. Discover real-world results, batching types, hardware trade-offs, and proven techniques to reduce AI infrastructure costs.

Vibe coding lets fintech teams build tools with natural language prompts instead of code. Learn how mock data, compliance guardrails, and AI-powered workflows are changing how financial apps are developed, without sacrificing security or regulatory compliance.

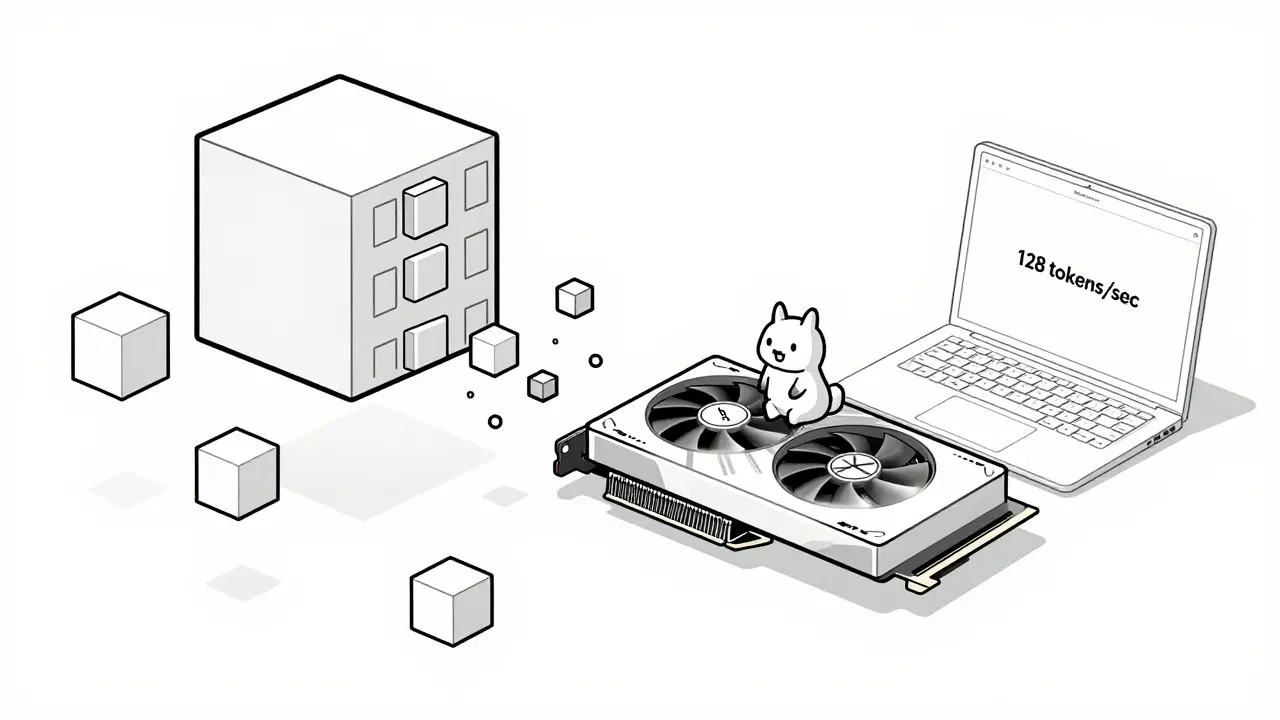

Learn how hardware-friendly LLM compression lets you run powerful AI models on consumer GPUs and CPUs. Discover quantization, sparsity, and real-world performance gains without needing a data center.

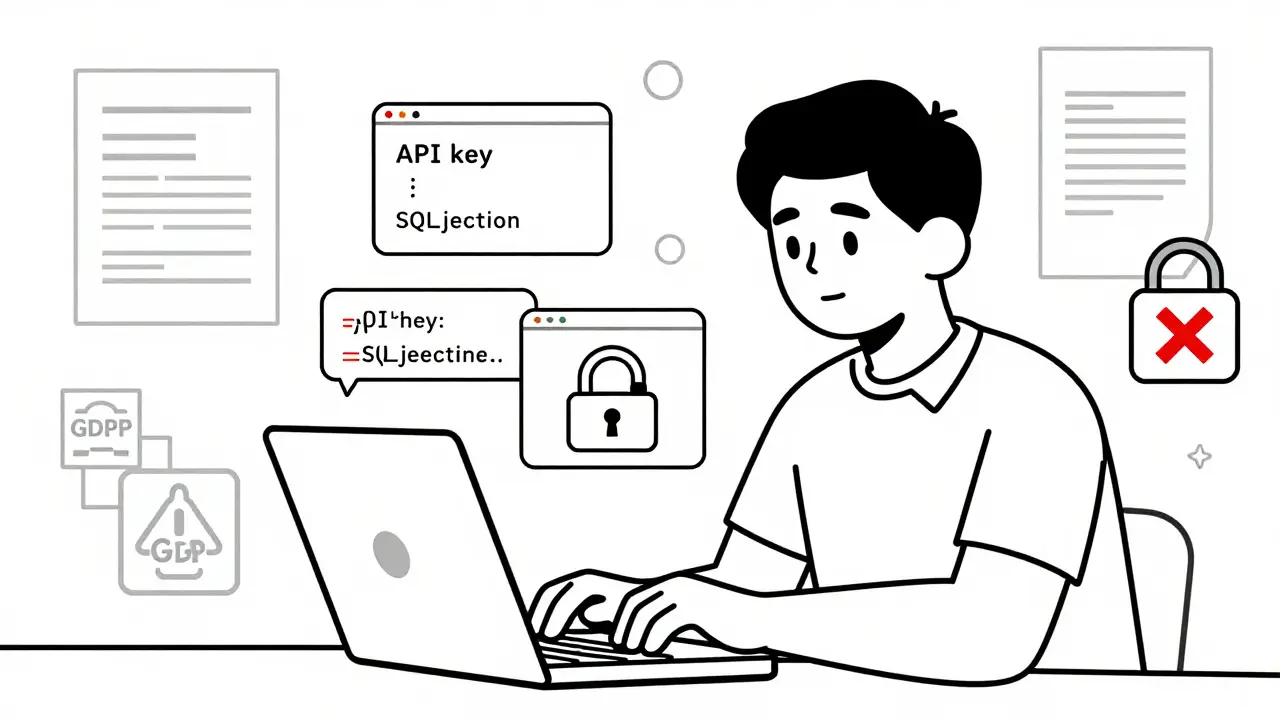

Procurement checklists for vibe coding tools must include security controls and legal terms to avoid data breaches, copyright lawsuits, and compliance fines. Learn what to demand from AI coding tools like GitHub Copilot and Cursor.

NLP pipelines and end-to-end LLMs aren't competitors; they're complementary. Learn when to use each for speed, cost, accuracy, and compliance in real-world AI systems.

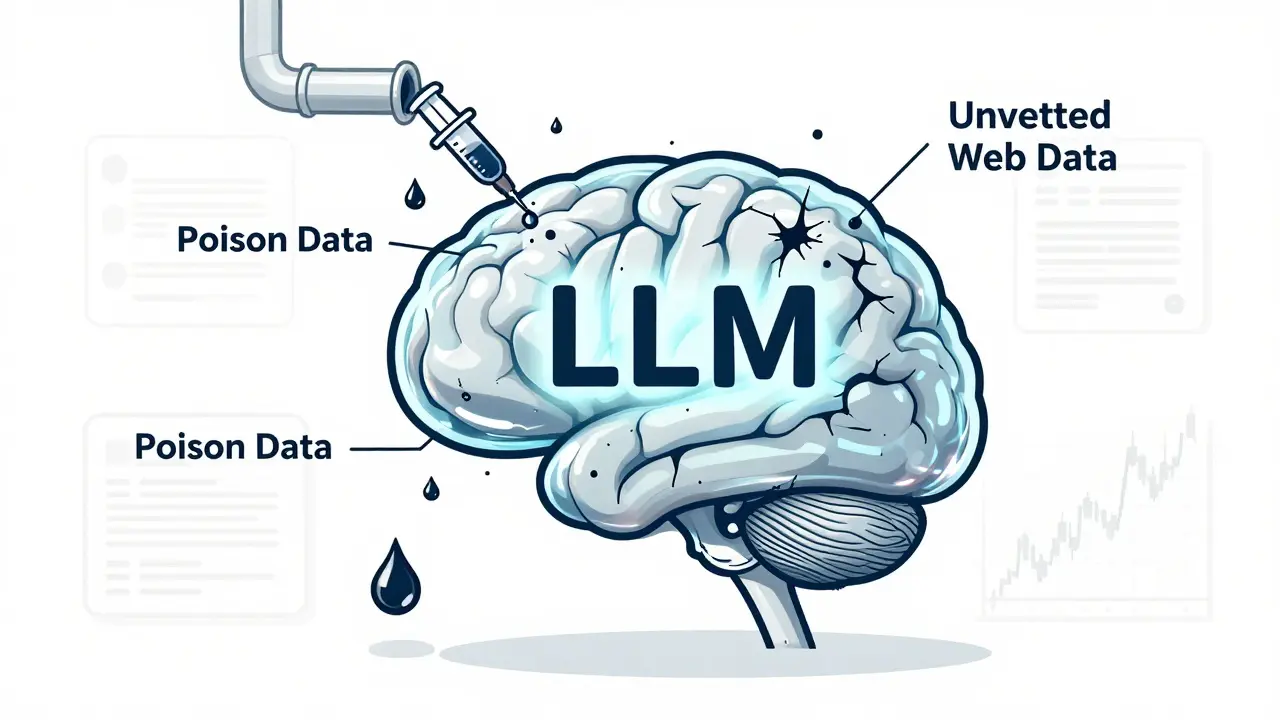

Training data poisoning lets attackers corrupt AI models with tiny amounts of fake data, leading to hidden backdoors and dangerous outputs. Learn how it works, real-world cases, and proven defenses to protect your LLMs.

Error-forward debugging lets you feed stack traces directly to LLMs to get instant, accurate fixes for code errors. Learn how it works, why it's faster than traditional methods, and how to use it safely today.

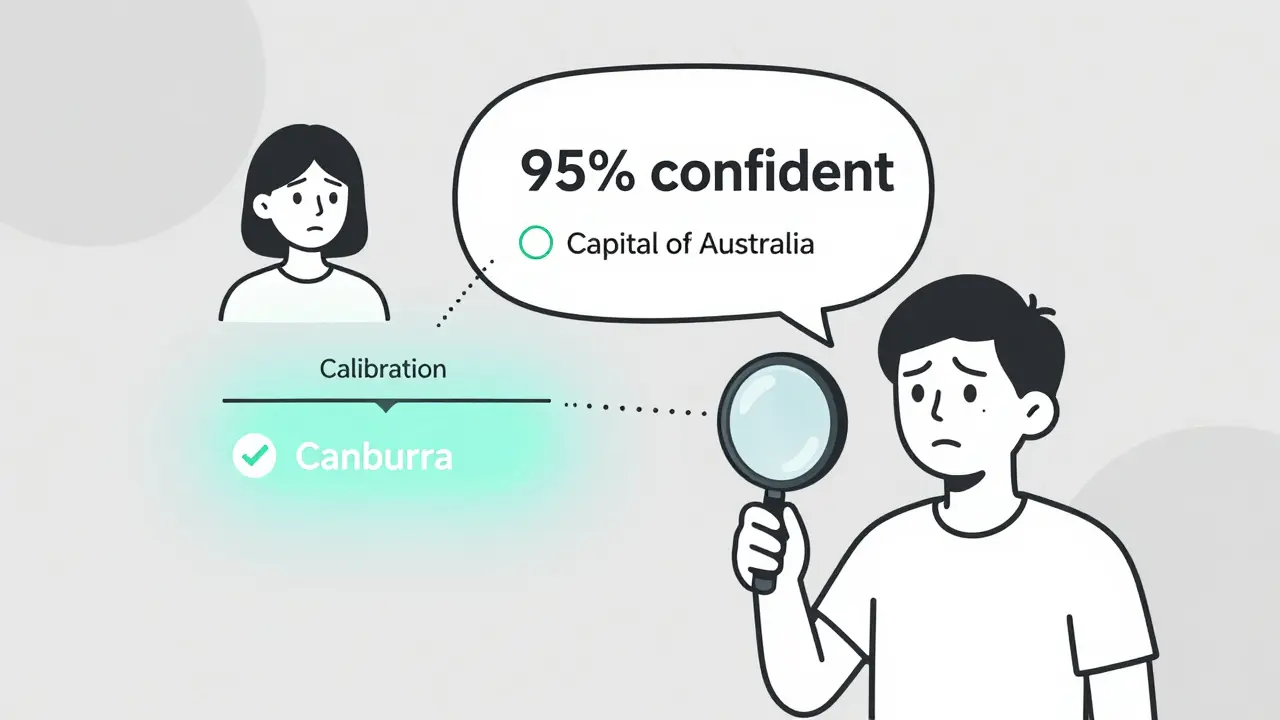

Most LLMs are overconfident in their answers. Token probability calibration fixes this by aligning confidence scores with real accuracy. Learn how it works, which models are best, and how to apply it.

LLM-powered semantic search is transforming e-commerce by understanding user intent instead of just matching keywords. See how it boosts conversions, reduces abandonment, and what you need to implement it successfully.

Pattern libraries for AI are reusable templates that guide AI coding assistants to generate secure, consistent code. Learn how they reduce vulnerabilities by up to 63% and transform vibe coding from guesswork into reliable collaboration.

Vibe coding teaches software architecture by having students inspect AI-generated code before writing their own. This method helps learners understand design patterns faster and builds deeper system-level thinking than traditional syntax-first approaches.

Performance budgets set clear limits on page weight, load time, and resource usage to keep websites fast. Learn how to define, measure, and enforce them using real tools and data to improve user experience and SEO.

Recent posts

Marketing Content at Scale with Generative AI: Product Descriptions, Emails, and Social Posts

Jun 29, 2025

Artificial Intelligence